The healthcare sector is integrating AI systems across clinical workflows at an unprecedented pace, from diagnostic imaging to treatment planning and administrative support. As artificial intelligence transforms patient care delivery, regulatory authorities worldwide are racing to establish comprehensive frameworks that balance innovation with patient safety. The current regulatory landscape for AI in healthcare is a complex web of federal guidance, state legislation, international standards, and voluntary industry practices that healthcare professionals and technology companies must navigate carefully.

Understanding this evolving regulatory environment has become critical for healthcare organizations, AI developers, and medical device manufacturers seeking to leverage AI while remaining compliant with an increasingly sophisticated set of rules. From the FDA’s Software as a Medical Device frameworks to state-specific legislation governing AI algorithms in clinical decision-making, the regulatory requirements continue to expand in both scope and complexity.

Current State of AI Healthcare Regulation

The foundation of AI healthcare regulation globally is the Software as a Medical Device (SaMD) framework, which treats AI tools as medical devices when they perform diagnostic, therapeutic, or monitoring functions. This approach recognizes that AI systems can materially affect patient care decisions and therefore require oversight similar to that applied to traditional medical devices.

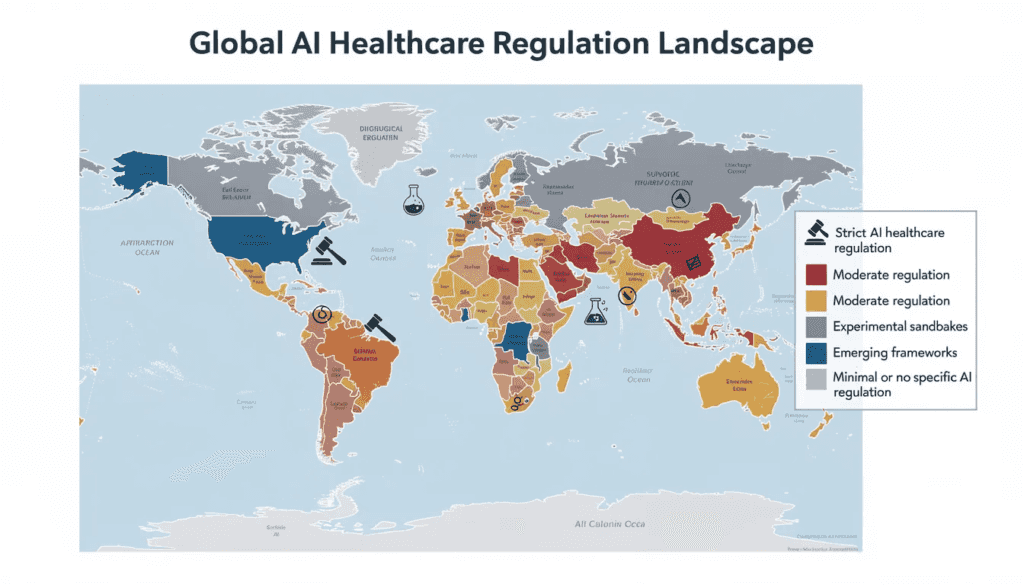

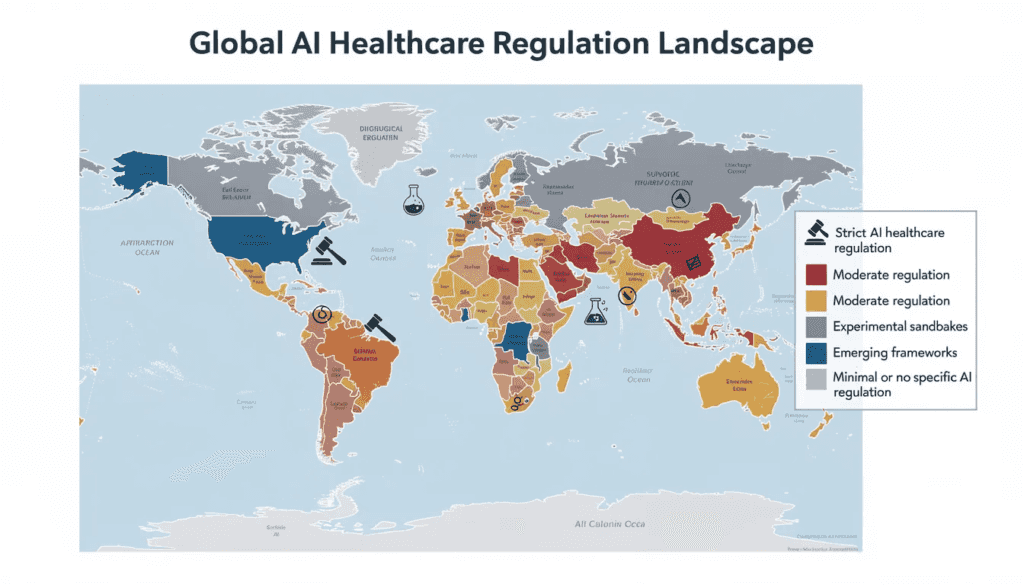

Key regulatory authorities have emerged as primary gatekeepers for AI in healthcare, each developing a distinct approach to oversight. The FDA leads medical device regulation in the United States, while the European Union’s Medical Device Regulation (MDR) governs AI tools across EU member states. The UK’s MHRA, Australia’s TGA, and China’s NMPA each maintain their own regulatory pathways for artificial intelligence systems in healthcare settings.

The regulatory landscape distinguishes between hard law (legally binding requirements with enforcement mechanisms) and soft law (voluntary guidelines and industry standards). Currently, most AI governance relies heavily on soft law, with regulatory authorities issuing guidance documents that encourage best practices while formal legislation catches up to technological advances.

Recent regulatory milestones include the FDA’s 2019 Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning-Based Software as a Medical Device and the subsequent 2021 AI/ML-based SaMD Action Plan. These documents established the foundation for lifecycle management of AI tools, emphasizing the need for predetermined change control plans and continuous monitoring of real-world performance.

The regulatory framework also addresses the distinction between Clinical Decision Support (CDS) tools and regulated medical devices. The 21st Century Cures Act created exemptions for certain CDS functions, but AI systems that independently analyze data and provide specific diagnostic or treatment recommendations typically fall under device regulation.

Global Regulatory Approaches by Jurisdiction

The international regulatory landscape for artificial intelligence in healthcare varies significantly across major jurisdictions, reflecting different priorities, healthcare systems, and regulatory philosophies. This variation creates both opportunities and challenges for global AI developers and healthcare organizations.

United States Federal and State Initiatives

President Joe Biden’s October 2023 Executive Order on artificial intelligence (Executive Order 14110, rescinded in January 2025) directed the Department of Health and Human Services to develop comprehensive AI policies for healthcare delivery, marking a significant federal commitment to regulating AI in healthcare. The order specifically required federal agencies to address AI applications in health care services and establish guidelines for trustworthy deployment of artificial intelligence.

The Centers for Medicare & Medicaid Services issued a critical Medicare Advantage final rule in April 2023 requiring that coverage decisions consider individual circumstances rather than relying solely on AI algorithms for medical necessity determinations. This rule directly affects managed care plans and health plans using artificial intelligence systems for utilization review and prior authorization processes.

The FDA has embraced Good Machine Learning Practice (GMLP) as a cornerstone of AI regulation, emphasizing data quality, algorithmic transparency, and clear boundaries for AI models. These practices require developers to demonstrate training data quality, implement robust validation procedures, and maintain human oversight throughout the AI system lifecycle.

State-level regulation has accelerated dramatically, with 47 states introducing over 250 bills related to AI governance in 2025 alone, resulting in 21 states enacting 33 new laws. This represents the most significant expansion of AI-specific legislation in healthcare history, covering everything from chatbot disclosure requirements to restrictions on autonomous clinical decision-making.

European Union and United Kingdom

The European Union’s AI Act, finalized in 2024, establishes a comprehensive risk-based classification system for artificial intelligence systems in healthcare. High-risk AI systems, which include most diagnostic and therapeutic AI tools, must undergo rigorous conformity assessments, implement robust risk management systems, and maintain detailed documentation throughout their operational lifecycle.

The European Medical Device Regulation has been updated to address AI-specific requirements, including enhanced post-market surveillance obligations and requirements for algorithm transparency. These updates recognize that AI systems can evolve after market approval, necessitating continuous monitoring and validation.

The UK’s MHRA Software and AI as a Medical Device Change Programme focuses specifically on cybersecurity and bias mitigation in AI tools. This programme recognizes that AI systems face unique security vulnerabilities and can perpetuate or amplify healthcare disparities if not properly designed and monitored.

NICE (National Institute for Health and Care Excellence) has developed the Evidence Standards Framework for Digital Health Technologies, which provides specific guidance for evaluating the clinical effectiveness and cost-effectiveness of AI tools within the NHS.

Asia-Pacific Regulatory Developments

China’s NMPA has issued technical guidelines emphasizing deep learning applications and clinical risk assessment protocols for AI in healthcare. These guidelines require comprehensive validation studies and ongoing performance monitoring, particularly for high-risk AI systems used in diagnostic applications.

Singapore’s AI Verify governance testing framework, based on 11 international AI ethics principles, offers a sector-agnostic approach that healthcare organizations can voluntarily adopt. This framework emphasizes algorithmic fairness, transparency, and human-centricity in AI deployment.

Australia’s TGA implemented a risk-based classification approach for SaMDs in February 2021, establishing clear pathways for AI medical devices based on their potential impact on patient health outcomes. This system allows for streamlined approval of lower-risk AI tools while maintaining rigorous oversight for high-risk applications.

Specific Areas of AI Healthcare Regulation

Regulatory authorities have identified several critical areas where AI applications in healthcare require targeted oversight due to their potential impact on patient safety, privacy, and health equity. These specialized regulatory frameworks address the unique risks and challenges associated with different types of artificial intelligence deployment.

Regulatory intensity varies significantly with assessed risk: high-risk applications such as diagnostic AI tools face more stringent requirements than administrative support systems. However, even seemingly low-risk applications can trigger regulatory requirements if they influence clinical decision-making or patient care pathways.

AI-Enabled Chatbots and Mental Health

California’s SB 243 established comprehensive requirements for AI chatbots in healthcare, mandating clear disclosure when patients interact with artificial intelligence systems and requiring crisis intervention protocols for mental health applications. This legislation recognizes that patients may not realize they’re communicating with AI systems, potentially affecting the therapeutic relationship and patient trust.

Illinois HB 1806 goes further by explicitly banning AI systems from engaging in direct therapeutic communication during psychotherapy sessions. This law prohibits artificial intelligence from making independent therapeutic decisions or generating treatment plans without human professional review, establishing clear boundaries for AI applications in mental health services.

Six states passed seven distinct laws in 2025 specifically addressing AI chatbots, with particular focus on protecting minors using AI-powered mental health applications. These laws recognize that young patients may be especially vulnerable to the limitations and potential risks of AI-driven mental health interventions.

The legislation typically requires that AI chatbots clearly identify themselves as non-human entities, provide mechanisms for immediate human intervention in crisis situations, and maintain detailed logs of interactions for quality assurance and regulatory compliance purposes.
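As a rough illustration of how a developer might combine these three obligations, the sketch below wraps a chatbot reply with an AI disclosure, a crisis check, and an interaction log. The class name, the disclosure wording, the keyword list, and the log format are all hypothetical and do not come from any statute; a keyword match is only a stand-in for a clinically validated crisis detector.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical values for illustration only; not taken from any statute.
AI_DISCLOSURE = "You are chatting with an automated AI system, not a human."
CRISIS_TERMS = ("suicide", "kill myself", "self-harm")


@dataclass
class ChatSession:
    log: list = field(default_factory=list)  # interaction log kept for audits

    def respond(self, user_message: str, model_reply: str) -> dict:
        """Wrap a model reply with disclosure, crisis check, and logging."""
        text = user_message.lower()
        crisis = any(term in text for term in CRISIS_TERMS)
        reply = {
            "disclosure": AI_DISCLOSURE,
            # In a crisis, withhold the model reply and hand off to a human.
            "message": "Connecting you with a human responder now." if crisis else model_reply,
            "escalate_to_human": crisis,
        }
        self.log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_message": user_message,
            "reply": reply,
        })
        return reply


session = ChatSession()
routine = session.respond("I have trouble sleeping", "Here are some sleep hygiene tips.")
urgent = session.respond("I keep thinking about suicide", "(model text)")
```

Here `routine` passes the model reply through with the disclosure attached, while `urgent` suppresses it and sets the escalation flag. Both exchanges end up in `session.log`.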

Clinical Decision Support and Diagnostic AI

New Mexico HB 178 empowers the Board of Nursing to regulate AI use in clinical practice while maintaining requirements that nurses exercise independent clinical judgment. This law exemplifies the balance regulators seek between enabling AI tools and preserving professional clinical decision-making authority.

Over 20 bills were introduced in 2025 specifically setting guardrails for AI in clinical care, addressing concerns about algorithmic bias, transparency in the clinical decision-making process, and the need for provider oversight of AI-generated recommendations. These bills typically require human review of AI recommendations and prohibit fully autonomous clinical decisions.

Provider oversight requirements have become standard across jurisdictions, mandating that healthcare professionals remain responsible for final clinical decisions even when using AI tools for decision support. This approach keeps professional liability with the clinician while still allowing AI to support their decisions.

AI applications in radiology, pathology, and diagnostic imaging face particular regulatory scrutiny due to their direct impact on diagnostic accuracy and patient outcomes. These systems typically require FDA clearance as medical devices and must demonstrate performance equivalent or superior to existing diagnostic methods.

Utilization Management and Prior Authorization

Federal regulation of AI in healthcare utilization management and prior authorization has intensified following concerns about algorithmic bias in coverage decisions. The regulatory framework now requires transparency when AI systems influence coverage determinations and mandates human review of adverse determinations.
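The human-review requirement can be pictured as a routing rule in which an AI denial is never final on its own. The sketch below is a minimal illustration under assumed names: `AuthorizationRequest`, `route_request`, the status strings, and the `ai_involved` flag are hypothetical and are not drawn from any rule text.

```python
from dataclasses import dataclass


@dataclass
class AuthorizationRequest:
    request_id: str
    ai_recommendation: str  # "approve" or "deny", produced by a hypothetical model


def route_request(req: AuthorizationRequest) -> dict:
    """Auto-finalize approvals; send every AI denial to a human reviewer."""
    if req.ai_recommendation == "approve":
        status = "approved"
    elif req.ai_recommendation == "deny":
        # The AI output is advisory only: no denial is final without a clinician.
        status = "pending_human_review"
    else:
        raise ValueError(f"unknown recommendation: {req.ai_recommendation!r}")
    return {
        "request_id": req.request_id,
        "status": status,
        "ai_involved": True,  # disclosure flag surfaced to patient and provider
    }


approved = route_request(AuthorizationRequest("PA-001", "approve"))
flagged = route_request(AuthorizationRequest("PA-002", "deny"))
```

The asymmetry is deliberate: only favorable outcomes are automated, so the model can speed up approvals but cannot issue an adverse determination by itself.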

CMS issued its Interoperability and Prior Authorization final rule in January 2024, requiring health insurers to implement Prior Authorization APIs by 2027. This rule directly affects how AI systems process prior authorization requests and ensures that patients and healthcare professionals can access decision-making information.

Approximately 60 bills addressing AI use by payers were introduced in 2025, with particular focus on fraud detection and quality management applications. These bills typically require disclosure when AI influences coverage decisions and provide appeal mechanisms for patients who believe they’ve been unfairly affected by algorithmic decisions.

California’s legislative activity exemplifies state-level approaches to payer AI regulation. While AB 682 was vetoed, SB 306 passed with specific reporting requirements for AI systems used in health insurance operations, establishing precedent for other states considering similar legislation.

Regulatory Challenges and Implementation Issues

Colorado’s delay of SB 205 implementation highlights the practical challenges regulatory authorities face in balancing innovation with patient safety and data privacy protection. The state recognized that overly restrictive regulations could stifle beneficial AI development while insufficient oversight could expose patients to unnecessary risks.

The complexity of AI technology often outpaces traditional regulatory frameworks designed for static medical devices. Unlike conventional medical devices, AI systems can evolve through machine learning, requiring new approaches to verification and validation that account for algorithmic changes over time.

Enforcement challenges plague soft-law approaches that rely on voluntary compliance rather than mandatory requirements. While guidance documents provide valuable direction, they lack the enforcement mechanisms necessary to ensure consistent implementation across the healthcare sector.

Technology companies developing AI tools face particular challenges in navigating the patchwork of state and federal requirements. A single healthcare claims management software product may need to comply with dozens of different regulatory requirements depending on where it’s deployed and how it’s used.

The rapid evolution of AI technology, particularly generative AI and large language models, presents ongoing challenges for regulatory authorities, who must develop oversight mechanisms for technologies that didn’t exist when current frameworks were designed. This creates uncertainty for both developers and healthcare organizations seeking to implement cutting-edge AI tools.

Resource constraints affect both regulatory authorities and healthcare organizations attempting to comply with evolving requirements. Smaller healthcare organizations may lack the technical expertise necessary to properly evaluate AI tools or implement required oversight mechanisms.

Transparency and Accountability Requirements

California SB 53 requires large frontier AI developers to publish detailed risk mitigation frameworks starting in 2026, establishing new standards for transparency in AI development and deployment. This legislation specifically addresses the “black box” nature of many AI systems by requiring explainable decision-making processes.

Whistleblower protections for employees managing critical safety risks in AI systems create new accountability mechanisms for healthcare organizations. These protections recognize that AI safety often depends on technical staff who may face pressure to overlook potential problems or rush implementations.

Algorithmic impact assessments have emerged as a key regulatory requirement, mandating that healthcare organizations evaluate the potential consequences of AI deployment on different patient populations. These assessments must address bias prevention, equity considerations, and individual rights protection.
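One quantitative input to such an assessment is a per-group performance comparison. The sketch below computes sensitivity (true-positive rate) for each patient group and the largest gap between groups. The data is synthetic, the function names are hypothetical, and the choice of sensitivity as the metric is an assumption; regulators do not prescribe this specific calculation.

```python
from collections import defaultdict


def sensitivity_by_group(cases: list) -> dict:
    """Return true-positive rate per group from (group, predicted, actual) tuples."""
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, predicted, actual in cases:
        if actual:
            actual_pos[group] += 1
            if predicted:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


def max_gap(rates: dict) -> float:
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


# Synthetic cases: (group label, model predicted disease, disease actually present)
cases = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", False, True),
    ("B", True, True), ("B", False, True), ("B", False, True), ("B", False, True),
]
rates = sensitivity_by_group(cases)
gap = max_gap(rates)
```

In this synthetic data the model detects three of four true cases in group A but only one of four in group B, a gap an assessment would need to explain or remediate before deployment.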

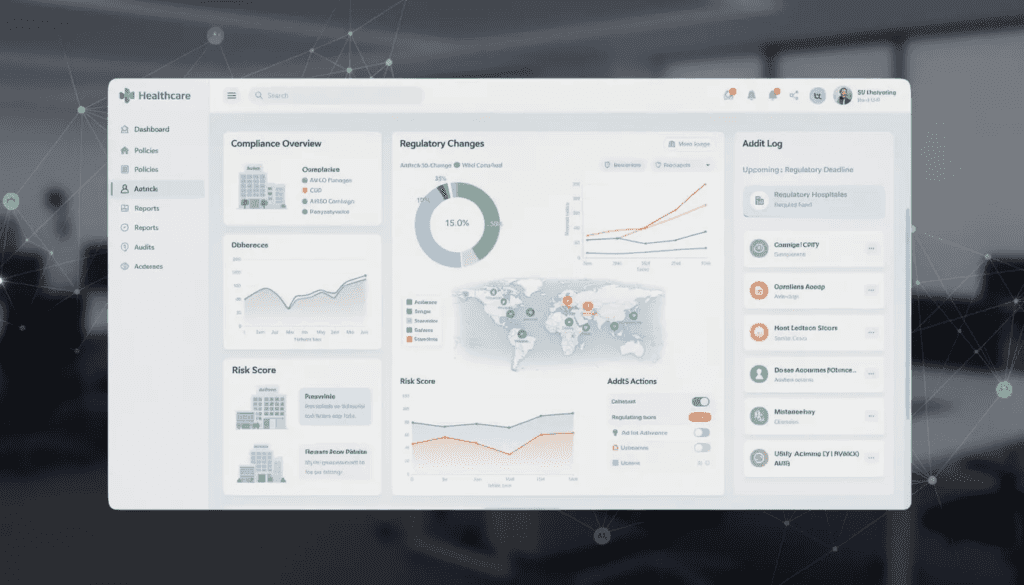

Post-market monitoring and real-world performance reporting obligations require ongoing validation of AI systems after deployment. Unlike traditional medical devices that remain static after approval, AI systems may drift in performance or encounter edge cases not present in training data, necessitating continuous oversight.
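A simple way to picture drift monitoring is a rolling accuracy check against the performance level established at clearance. The sketch below is illustrative only: the `PerformanceMonitor` class, the baseline, the tolerance, and the window size are assumed values, and production monitoring would normally use larger samples and statistical tests rather than a fixed threshold.

```python
from collections import deque


class PerformanceMonitor:
    """Track rolling accuracy on confirmed cases and flag drops below baseline."""

    def __init__(self, baseline_accuracy: float, tolerance: float, window: int):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # True = prediction matched ground truth

    def record(self, prediction, ground_truth) -> None:
        self.outcomes.append(prediction == ground_truth)

    def rolling_accuracy(self) -> float:
        if not self.outcomes:
            raise ValueError("no confirmed cases recorded yet")
        return sum(self.outcomes) / len(self.outcomes)

    def drift_detected(self) -> bool:
        # Wait for a full window so a few early errors do not trigger an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline_accuracy=0.90, tolerance=0.05, window=10)
for i in range(10):
    # Simulated stream: the model is wrong on 3 of 10 confirmed cases.
    monitor.record(prediction="positive", ground_truth="negative" if i < 3 else "positive")
```

With three errors in ten confirmed cases, rolling accuracy falls to 0.7, below the 0.85 alert threshold implied by the assumed baseline and tolerance, so `monitor.drift_detected()` returns `True`.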

Regulatory frameworks increasingly require audit trails that document AI decision-making processes, enabling post-hoc analysis when questions arise about AI-influenced clinical decisions. These requirements create significant data management obligations for healthcare organizations.
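One common engineering pattern for audit trails that must stand up to later scrutiny is a hash chain, in which each entry's hash covers the previous entry so that after-the-fact edits are detectable. The sketch below shows that pattern using only the Python standard library. The record fields (`model_version`, `input_id`, `output`) are hypothetical, and nothing here is mandated by a specific regulation.

```python
import hashlib
import json


def append_entry(trail: list, record: dict) -> dict:
    """Append a record whose hash covers its content and the previous hash."""
    prev_hash = trail[-1]["hash"] if trail else "0" * 64
    payload = json.dumps(record, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    entry = {"record": record, "prev_hash": prev_hash, "hash": entry_hash}
    trail.append(entry)
    return entry


def verify_trail(trail: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = "0" * 64
    for entry in trail:
        payload = json.dumps(entry["record"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


trail = []
append_entry(trail, {"model_version": "1.2.0", "input_id": "study-17", "output": "flag for review"})
append_entry(trail, {"model_version": "1.2.0", "input_id": "study-18", "output": "no finding"})
intact = verify_trail(trail)
trail[0]["record"]["output"] = "no finding"  # simulate tampering
tampered_ok = verify_trail(trail)
```

The untouched trail verifies, and the check fails once the first record is altered. A real deployment would also need access controls and durable storage, which this sketch leaves out.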

Patient consent mechanisms have evolved to address AI-specific considerations, including clear disclosure when AI influences care decisions and options for patients to request human-only decision-making in certain circumstances.

International Harmonization Efforts

The World Health Organization has provided guidance supporting regulatory authorities in managing AI risks globally, emphasizing the need for internationally coordinated approaches to AI governance in healthcare. This guidance recognizes that health data flows across borders and that AI systems developed in one country may be deployed worldwide.

US-EU voluntary AI code of conduct initiatives promote global regulatory convergence while respecting jurisdictional differences in healthcare systems and legal frameworks. These efforts aim to create baseline standards that can be adapted to local requirements while maintaining core safety and effectiveness principles.

Standardization efforts focus on promoting interoperability across healthcare systems, enabling AI tools developed under one regulatory framework to be more easily adapted for use in different jurisdictions. This reduces development costs while improving global access to beneficial AI technologies.

International professional organizations, including medical specialty societies and healthcare technology associations, play increasingly important roles in developing voluntary standards that influence formal regulatory requirements across multiple countries.

The challenges in creating unified international standards for AI medical devices include differences in healthcare delivery models, varying privacy and data protection requirements, and distinct cultural approaches to medical decision-making and patient autonomy.

Trade considerations also influence international harmonization efforts, as countries seek to protect domestic AI development while enabling beneficial international collaboration and technology transfer.

Future Regulatory Outlook

Regulatory developments expected in 2025-2026 point to continued expansion of AI-specific requirements across all levels of government. Federal agencies are developing more detailed guidance for specific AI applications, while state legislatures continue introducing targeted legislation addressing emerging AI risks and opportunities.

Generative AI and large language models in healthcare represent emerging areas requiring immediate regulatory attention. These technologies raise unique challenges around content generation, potential for hallucination, and integration with electronic health records that existing frameworks don’t adequately address.

Industry recommendations emphasize the need for adaptive regulatory frameworks that can accommodate rapid AI evolution without stifling innovation. This includes calls for regulatory sandboxes that allow controlled testing of new AI approaches and iterative feedback between regulators and developers.

Anticipated changes in risk-based classification systems for healthcare AI applications reflect growing understanding of different AI technologies and their varying risk profiles. Future classifications may distinguish more precisely between different types of machine learning applications and their appropriate regulatory requirements.

The regulatory landscape will likely see increased convergence between cybersecurity, privacy, and AI-specific requirements, recognizing that these domains are interconnected in modern healthcare technology implementations.

Emerging focus areas include AI applications in clinical trials, real-world data collection, and population health management, each requiring specialized regulatory approaches that address their unique characteristics and risk profiles.

The future of AI healthcare regulation will depend significantly on the ability of regulatory authorities to balance competing priorities: enabling beneficial innovation while protecting patient safety, promoting economic development while ensuring equitable access, and maintaining professional clinical judgment while leveraging AI capabilities.

Healthcare organizations and technology companies must prepare for this evolving landscape by developing robust compliance programs, investing in regulatory expertise, and engaging proactively with regulatory authorities as they develop new requirements. The organizations that successfully navigate these challenges will be best positioned to leverage AI technology while maintaining public trust and regulatory compliance in an increasingly complex environment.

As artificial intelligence continues transforming healthcare delivery, the regulatory frameworks governing these systems will play a crucial role in determining whether this transformation benefits all patients equitably and safely. Staying informed about regulatory developments and actively participating in the policy development process remains essential for all stakeholders in the healthcare continuum seeking to harness the potential of AI while fulfilling their obligations to patient welfare and public health.